Effective grid models require reliable data

From numerous projects with grid operators we've learned that an insufficient data basis is often perceived as a major hurdle in the process of digitizing the grid. However, experience has shown that getting started is worth it, even if the data situation seems challenging at first. All of our customers have started off with a limited data basis and still made rapid progress through organized and automated data quality processes — without years of preparation.

Example from practice

💡 We recently helped a grid operator from Southern Germany reduce the share of blackfallen grids from 1.4% to 0.05% at the medium-voltage level and from 7.5% to 1.3% at the low-voltage level within six months. At the same time, we created a digital twin for a grid area of about 19,000 connection points.

No smart grid planning without clean data

High-quality data is essential for digitizing and automating processes and ultimately determines whether the energy transition becomes an opportunity or a burden for grid operators. Distribution system operators that have complete and precise grid data can make quicker and more informed decisions:

- Appropriate connection points can be found right away

- Available grid capacities can be identified more transparently

- Grid expansion projects are prioritized more specifically

In reality, however, many grid planners see a great need for improvement when it comes to a reliable data basis. What's especially critical:

- Different formats and sources in system environments that have evolved over time make it more difficult to link data

- The dynamics of the distribution grid, driven by ever-increasing numbers of generators and loads, make it essential to keep data up-to-date

- Missing or inconsistent data makes it difficult to perform precise calculations

No more chaos: Smart solution for systematic data integration

Our response to these challenges: The Intelligent Grid Platform (IGP). As an automated data platform, the IGP connects various source systems, merges different data pools, aggregates and analyzes data, and effectively supports data improvement. Starting off with a limited data basis is no problem: The IGP compensates for missing objects and parameters by applying fallback assumptions that are then validated step by step.
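The fallback idea can be illustrated with a minimal Python sketch. It is not the IGP's implementation: the `LineSegment` class, the `apply_fallbacks` helper and the numeric defaults are hypothetical and only show the principle of filling gaps while recording which values were assumed, so that they can be validated later.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical fallback values, chosen only for illustration. Real defaults
# would come from the operator's planning guidelines.
FALLBACK_LINE_PARAMS = {"r_ohm_per_km": 0.206, "max_current_a": 270.0}


@dataclass
class LineSegment:
    segment_id: str
    length_km: float
    r_ohm_per_km: Optional[float] = None
    max_current_a: Optional[float] = None
    # Names of parameters that were filled with a fallback value.
    assumed_fields: list = field(default_factory=list)


def apply_fallbacks(segment, fallbacks=FALLBACK_LINE_PARAMS):
    """Fill missing parameters and record which ones were assumed."""
    for name, value in fallbacks.items():
        if getattr(segment, name) is None:
            setattr(segment, name, value)
            segment.assumed_fields.append(name)
    return segment


# The resistance is known, the current rating is missing in the source data.
seg = apply_fallbacks(LineSegment("L-001", length_km=0.35, r_ohm_per_km=0.32))
print(seg.assumed_fields)
```

Keeping the list of assumed fields next to the data makes it possible to replace each assumption with a verified value step by step.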

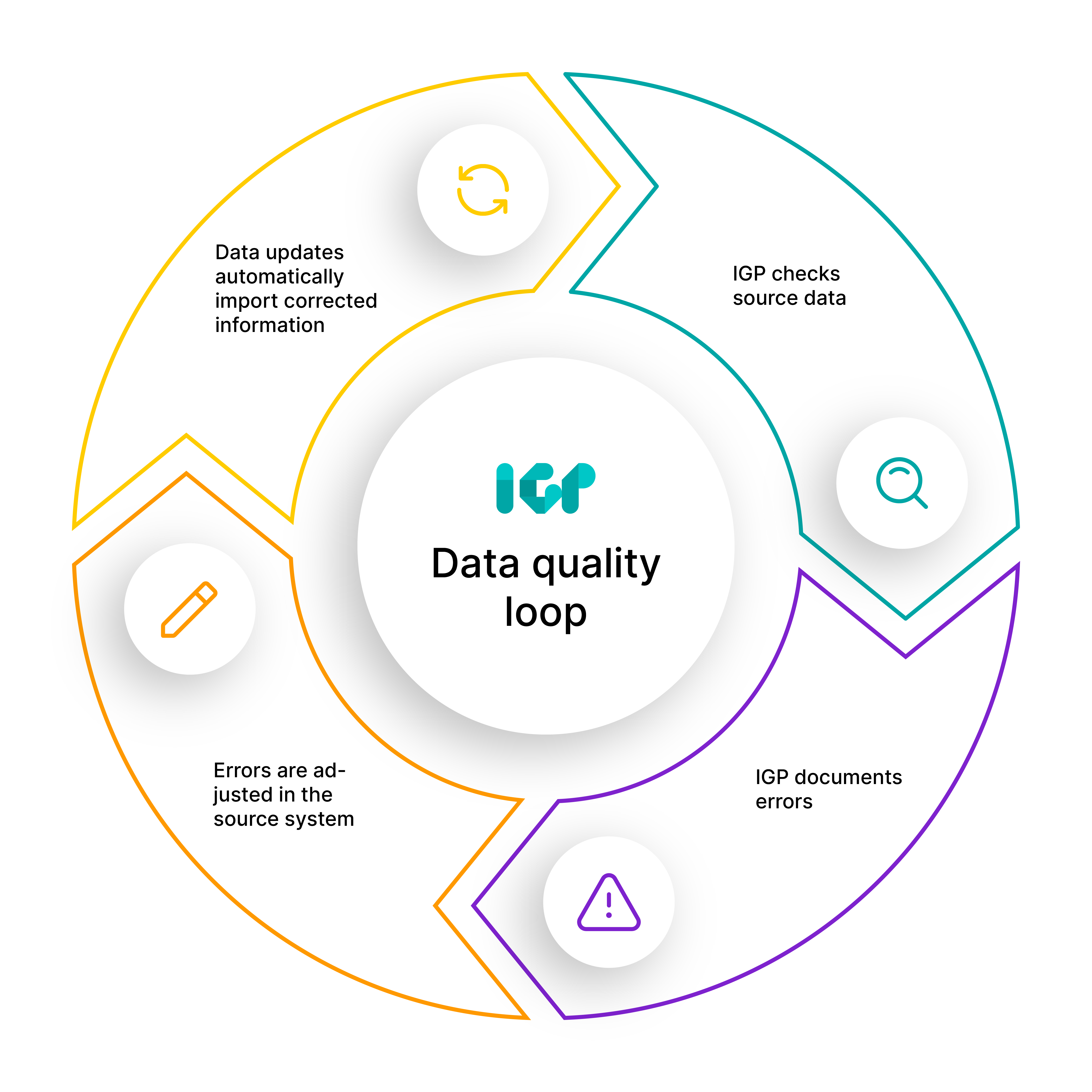

The clear and precise documentation of gaps and anomalies in the data basis enables staff to quickly forward data issues to the appropriate department. This structured process organizes the data and increases transparency, providing a solid foundation for grid planning. The process for automatic data validation is divided into the following steps:

- The sync combines data from source systems and copies it into the internal database

- Elemental testing checks the data of each element (e.g. line segments, grid participants, or secondary substations) for plausibility

- Topological testing verifies that galvanically connected elements are energized and at the correct voltage level; it also ensures that outgoing lines of a site are connected to a busbar

- Electrical testing indicates whether calculation results, grid topology and the electrical parameters are plausible

Thanks to this data quality loop, all imported data is validated, which keeps data quality consistently high.
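To make the elemental and topological steps more concrete, here is a simplified Python sketch. It assumes a toy data model (nodes as a set of IDs, lines as dictionaries) and hypothetical function names. It does not reflect the IGP's actual rules and leaves out the electrical testing step, which would require a load flow calculation.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Issue:
    stage: str        # "elemental" or "topological"
    element_id: str
    message: str


def elemental_checks(lines):
    """Plausibility check on single elements: line length must be positive."""
    return [
        Issue("elemental", line["id"], "non-positive length")
        for line in lines
        if line["length_km"] <= 0
    ]


def topological_checks(nodes, lines, feeder):
    """Flag nodes that cannot be reached from the feeding node."""
    neighbours = {node: set() for node in nodes}
    for line in lines:
        neighbours[line["from"]].add(line["to"])
        neighbours[line["to"]].add(line["from"])
    # Breadth-first search starting at the feeder marks all energized nodes.
    energized, queue = {feeder}, deque([feeder])
    while queue:
        for nxt in neighbours[queue.popleft()]:
            if nxt not in energized:
                energized.add(nxt)
                queue.append(nxt)
    return [
        Issue("topological", node, "not connected to feeder")
        for node in sorted(nodes - energized)
    ]


nodes = {"station", "a", "b", "c"}
lines = [
    {"id": "l1", "from": "station", "to": "a", "length_km": 0.2},
    {"id": "l2", "from": "a", "to": "b", "length_km": 0.0},
]
issues = elemental_checks(lines) + topological_checks(nodes, lines, "station")
for issue in issues:
    print(issue)
```

In this example, line `l2` is reported by the elemental check because of its zero length, and node `c` is reported by the topological check because no line connects it to the feeding station.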

Advantages for grid operators:

- High data quality & continuous data maintenance, guaranteed by a fully automated, efficient process and automated source data testing

- Reporting & documentation of data issues that, for example, have been imported from GIS: This way, the appropriate department is informed and can resolve issues more easily

- Accepting only updated data into the grid model to ensure an up-to-date and high-quality data basis that also benefits other interfaced systems

- Data aggregation & full transparency in a centralized grid model as a single source of truth, including a convenient overview of all data gaps and issues
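As a rough illustration of how reported data issues could be routed to the appropriate department, the following Python sketch groups issues by their source system. The mapping in `RESPONSIBLE`, the department names and the issue fields are assumptions made for this example, not part of the IGP.

```python
from collections import defaultdict

# Hypothetical mapping from source system to responsible department.
RESPONSIBLE = {"GIS": "grid documentation", "ERP": "asset management"}


def build_report(issues):
    """Group issue messages by the department responsible for the source."""
    report = defaultdict(list)
    for issue in issues:
        department = RESPONSIBLE.get(issue["source"], "unassigned")
        report[department].append(f'{issue["element_id"]}: {issue["message"]}')
    return dict(report)


issues = [
    {"source": "GIS", "element_id": "l2", "message": "non-positive length"},
    {"source": "ERP", "element_id": "t7", "message": "missing rated power"},
    {"source": "GIS", "element_id": "c", "message": "not connected to feeder"},
]
report = build_report(issues)
for department, messages in report.items():
    print(department, messages)
```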

Success figures from practice

- 75+ successful data onboarding projects

- 280+ implemented interfaces with other systems

- 320,000 modelled grids with 35+ million connection points

Customer opinions

Jonas Bielemeier, Head of Digitalization and Projects at naturenergie netze GmbH

"The IGP combines a number of source systems to create a single grid model, following strict rules. Data issues and inconsistencies are revealed without exception, giving us clear starting points for improving data quality. Combined with regular synchronization for data quality monitoring, the IGP establishes a continuous cycle for quality improvement."

Rafael Echtle, Asset Management, Project Planning and Design, Grid Documentation at E-Werk Netze

"The IGP has played a crucial role in identifying and collecting data issues and has led to improved data quality and a computable grid model in no time."

Mona Keller, Head of Asset Management & Grid Planning at FairNetz GmbH

"It was only after we had introduced the IGP that we managed to identify grids with inadequate data quality. We were then quickly able to detect data inconsistencies and gaps and clean the data step by step."

Dennis Theis, Head of Digital Grid Technologies at Syna GmbH

"After the rollout of the IGP, we were able to work with tangible quality KPIs, such as the number of grids with high utilization. There is no doubt that this has helped raise awareness of data quality significantly."

Conclusion: Reaching the goal with reliable grid data

High-quality data is more than just nice to have: it forms the foundation for digital processes, informed investment decisions and targeted grid expansion. With the IGP, grid operators can automate data integration and cleansing, and maintain high data quality. This paves the way for a resilient, digital and efficient power supply.

Also interesting:

Infopaper: Shit In = Shit Out?

Grid Data Quality – The Ultimate Checklist

High data quality is a basic precondition for digitalizing and automating many processes that are currently seen as a bottleneck for a fast energy transition. Yet many DSOs we speak to wish their data were of much higher quality than it is now. At the heart of this issue lies the problem of “Shit in = Shit out”. When data has been entered incorrectly, it affects analytics, insights, planning and investment decisions. In this infopaper, you’ll find out how to tackle this problem step by step.

Case Study: FairNetz

Improved data quality through higher grid transparency

This case study builds upon the first part and invites us to follow up on the progress of the implementation of the Intelligent Grid Platform at FairNetz. The electricity network operator from Reutlingen now leverages the improved data quality to speed up the processing of grid connection requests. For instance, 50% of all requests for new connections are assessed positively in under 5 minutes. Additionally, FairNetz relies on the IGP as its “Single Source of Truth” for analyzing the grid participant structure, aiming to further improve the data basis for the calculation logic and create a foundation for sound grid expansion investments.
